Configuration

What is the format of the configuration file for an AWS Blu Age Data Migrator project?

The INI format

Which types of input data can be used by the AWS Blu Age Data Migrator?

CSV, EBCDIC and QDDS

Which database driver versions are compatible with the AWS Blu Age Data Migrator?

Compatible database drivers:

- MSSQL: 12.6.0.jre11

- ORACLE: 23.7.0.25.01

- POSTGRESQL: 42.7.7

- DB2LUW: 4.33.31

These jars are available through the links below.

MSSQL - https://go.microsoft.com/fwlink/?linkid=2259203

Note: Unzip the downloaded sqljdbc_12.6.0.0_enu.zip and find mssql-jdbc-12.6.0.jre11.jar under the enu > jars folder.

ORACLE - https://repo1.maven.org/maven2/com/oracle/database/jdbc/ojdbc11/23.7.0.25.01/

POSTGRES - https://jdbc.postgresql.org/download/postgresql-42.7.7.jar

DB2LUW - https://www.ibm.com/support/pages/db2-jdbc-driver-versions-and-downloads

Where we need to put the driver files to configure the AWS Blu Age Data Migrator?

Follow these steps:

- Create a folder 'drivers' in your migration project root folder, i.e.

migrationProjectPath. - Create the folders (MSSQL, ORACLE, POSTGRE, DB2LUW) in folder 'drivers'.

- Put in each folder the driver corresponding to each database (See driver versions).

How to add Oracle Service Name information to the secret in the AWS Secret Manager ?

By default, AWS Secret Manager doesn't provide a field to store the Oracle Service Name. Hence, with the help of edit option, add a row with key as 'dbservicename' and provides the corresponding value.

How can I create a custom PostgreSQL database using the AWS Blu Age Data Migrator?

Create a SQL file with custom database creation statement. Get the absolute or relative path of the file and give it as input for the parameter stepDatabaseCreateScriptPath.

Note:

- This parameter takes into consideration only if the value of the property stepDatabaseKillDropCreate as true.

- This script is not limited to database creation. Hence, it's responsibility of the user to provide the proper statements in a SQL file.

How to install BCP Utility for MSSQL loading data?

The installation guide is available here.

How can I insert low-level data in postgreSQL database?

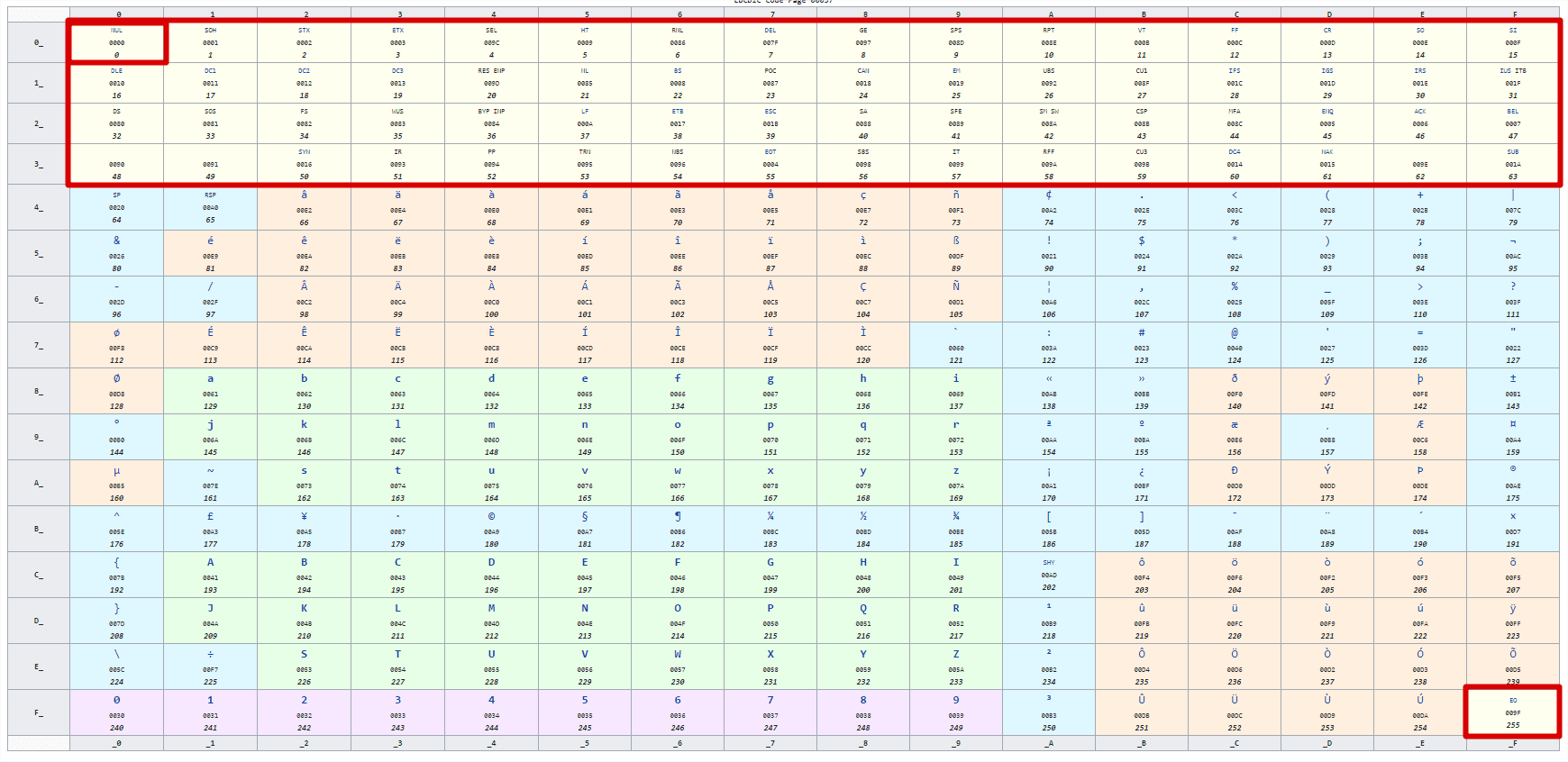

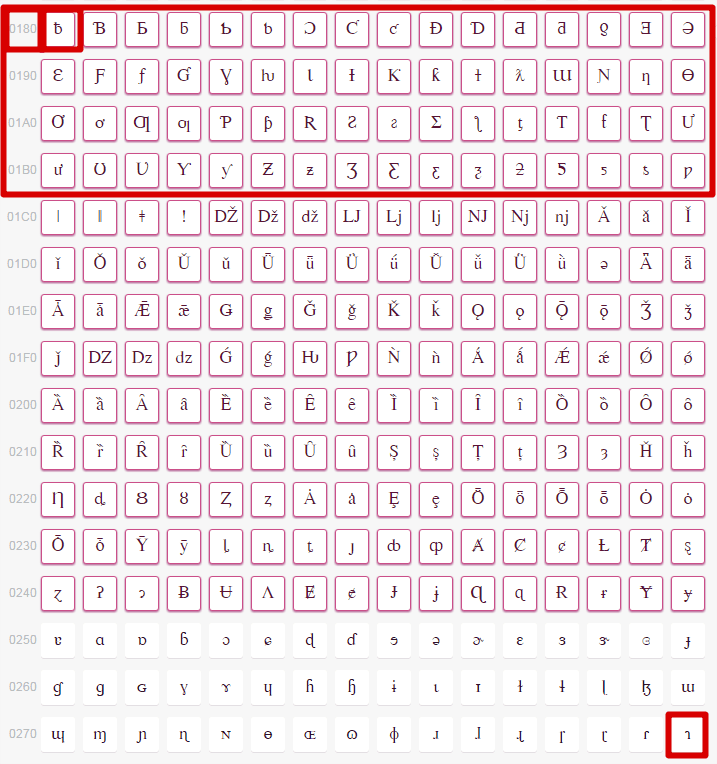

For CHAR and VARCHAR column, it consists in transposing the control characters from the EBCDIC page code to a UTF-8 compatible page code containing 256 non-confusing characters.

These characters are transposed to the Latin Extended-B page + the first 3 lines of the International Phonetic Alphabet page. The Latin Extended-B page starts with the character LATIN SMALL LETTER B WITH STROKE, unicode point code 0180. The EBCDIC control characters are (in general for all EBCDIC page codes) on the first 16 * 4 characters + the last character.

The first character is LOW-VALUE, the last is HIGH-VALUE.

Example:

The program inserts LOW-VALUE, 0-byte is converted to 0x180, the ƀ character is inserted into database.

The program reads the ƀ character, 0x180 is converted to 0-byte, the program gets 0-byte.

How to Handle specific added technical columns?

When you try to load data coming from PF files in AS400 context, Analyzer tool add technical columns to each table scripts. However those additional data are not contained in data file provided by customer, so the Data Migrator provides a mechanism to add artificially those missing data in Data loading step.

The tool try to reach out the fixed-values.properties file into the targeted input folder. If the file is found, it is considered. He should looks like this :

TABLEPF1/TABLEPF1=NOW,NOW,TABLEPF1,TABLEPF1 TABLEPF2/TABLEPF2=NOW,NOW,TABLEPF2,TABLEPF2 ....

To each inserted row, the tool will add those column values.

To prevent to add a new entry in this file for each new table (PF), the defaultFixedValues property exists for a loading step.

In most case, customer data comes from a unique Datafile and the sample describe above is enough.

In business term, it allows to load different DataFiles (AS400 data) by managing specific partitions for them.

This property will be used for each table that do not appear in the fixed-values.properties files.

The fixed values can still be overridden for a particular table if needed using the fixed-values.properties file.

When defining the Fixed values, you can use the special keywords: FOLDER and FILENAME that will help populating the srcfile and member column.

Example:

defaultFixedValues=NOW,NOW,FOLDER,FOLDER

FOLDER and FILENAME will be evaluated for each file being processed in the data folder.

Meaning of the special keywords:

FOLDER: last folder in the path of the file, without the separators

Example: C:\migration\data\DataFIles\PKTTYP\FILE1.txt => FOLDER = PKTTYP

FILENAME: Data file name, without the extension

Example: C:\migration\data\DataFIles\PKTTYP\FILE1.txt => FILENAME = FILE1

How to Solve Operating Trouble in PostgreSQL?

The engine performs a full scan rather than using defined indexed for plan execution. You have to add an ANALYZE command in a SQL script, in a last step that enables to update PostgreSQL statistics to help determine the most efficient execution plans for queries.

What is the IMS EBCDIC unload record format supported by Data Migrator?

In IMS database environment, data can be shared thru utility file format IXB (Index Builder) that contains index information for faster data access and retrieval.

Data Migrator has complete support to interpret IMS extract file IXB, which follows below extract layout. Data Migrator expects one IXB file for each DBD.

| Columns | Field | Description |

| 1 ~ 2 | Logical number order | Used to sort the file, so logical children are inserted after logical parents. This is "01" for normal segments, "02" for logical children and either dependent segments. |

| 3 ~ 4 | Database number | The PCB number used to extract the data, for logical relationships the primary database specified on the Extract panel has a value of 1. Logically related databases have a value of 2, 3, and so on. |

| 5 ~ 8 | Extract order | Order in which the IMS segments were extracted by FM/IMS |

| 9 ~ 16 | DBD name | DBD of one of the databases involved in the extract |

| 17 ~ 18 | Segment code | |

| 19 ~ 26 | Segment name | |

| 27 ~ 28 | Reserved | Set to low values (x'00') |

| 29 ~ 32 | Concatenated key length n | Actual concatenated key length for this segment |

| 33 ~ (33+n) | Concatenated key | |

| (33+n) ~ (33+n+4) | Record size m | 4-byte indicating record size |

| (33+n+4) ~ (33+n+4+m) | IMS segment data | This starts at 33+Max concatenated key length |

What is the IMS CSV record format supported by Data migrator?

Data Migrator expects the csv record to contain an extra column of concatenated key as the first column, while rest of configuration remains the same. Data Migrator expects one csv file for each table.